Emotion Detection in Images: Training a CNN from Scratch vs. Using the Pre-trained DeepFace Model

The goal is to develop an AI model that detects human emotions (Angry, Disgust, Fear, Happy, Sad, Surprise and Neutral) from an input image.

There are two approaches here. One is to train a CNN (convolutional neural network) from scratch using labeled images as training data; the other is to use a pre-trained model such as DeepFace. In this article we cover both options and compare the results.

The first step in training a model is gathering suitable data. In this case we need a dataset of face images, each labeled with the emotion it expresses. I used msambare's fer2013 dataset from Kaggle, which provides separate train and test sets containing thousands of 48x48 grayscale images, sorted into one directory per emotion label.

We build the model with TensorFlow's Keras API and set up data generators that load and preprocess images from the dataset directory, apply data augmentation (such as rotation and flipping), and split the data into training and validation sets. We then pass the formatted data to the model and start training.
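A minimal sketch of such a data pipeline follows. It is not the author's code: instead of the older `ImageDataGenerator` class it uses `tf.keras.utils.image_dataset_from_directory`, which TensorFlow's documentation now recommends for new code, together with Keras preprocessing layers for the flips and rotations. The 80/20 split, batch size, seed, and rotation amount are assumptions, and `make_datasets` is a hypothetical helper name.

```python
import tensorflow as tf

IMG_SIZE = (48, 48)  # fer2013 images are 48x48 grayscale


def make_datasets(train_dir, validation_split=0.2, batch_size=64, seed=123):
    """Hypothetical helper: load one-subfolder-per-emotion images, split, rescale, and augment."""
    common = dict(
        validation_split=validation_split,
        seed=seed,                 # same seed for both calls, so the subsets do not overlap
        image_size=IMG_SIZE,
        color_mode="grayscale",    # single channel: each image is (48, 48, 1)
        label_mode="categorical",  # one-hot labels, for categorical_crossentropy
        batch_size=batch_size,
    )
    train_ds = tf.keras.utils.image_dataset_from_directory(train_dir, subset="training", **common)
    val_ds = tf.keras.utils.image_dataset_from_directory(train_dir, subset="validation", **common)
    class_names = train_ds.class_names  # folder names in alphabetical order

    rescale = tf.keras.layers.Rescaling(1.0 / 255)   # pixel values 0..255 -> 0..1
    flip = tf.keras.layers.RandomFlip("horizontal")  # mirror faces left/right
    rotate = tf.keras.layers.RandomRotation(0.05)    # up to +/-5% of a full turn (about 18 degrees)

    def augment(x, y):
        # Augmentation is applied to the training split only.
        x = rescale(x)
        x = flip(x, training=True)
        x = rotate(x, training=True)
        return x, y

    train_ds = train_ds.map(augment, num_parallel_calls=tf.data.AUTOTUNE).prefetch(tf.data.AUTOTUNE)
    val_ds = val_ds.map(lambda x, y: (rescale(x), y)).prefetch(tf.data.AUTOTUNE)
    return train_ds, val_ds, class_names
```

For example, `make_datasets("fer2013/train")` would return both splits plus the class names (the path depends on where the Kaggle archive was extracted). Keeping augmentation out of the validation split means validation accuracy is measured on unmodified images.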

It's worth noting that we set the input layer's shape to 48x48 for several reasons:

- fer2013 provides its images at this size.

- While 48x48 may seem small, it is generally sufficient to capture the key facial features needed for emotion recognition.

- The small size encourages the model to focus on the most important facial features rather than on potentially irrelevant background details.

However, with modern hardware and deep learning techniques, it's possible to work with larger image sizes if needed.

Some researchers now use larger sizes (e.g., 96x96 or 224x224) for emotion recognition, especially when applying transfer learning from models pre-trained on larger datasets.
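The CNN itself can be sketched as a small stack of convolution and pooling layers that takes a 48x48 single-channel input and ends in a 7-way softmax, one output per emotion. The layer counts, filter sizes, dropout rate, and optimizer below are illustrative assumptions rather than the architecture used in this project, and `build_cnn` is a hypothetical name.

```python
import tensorflow as tf

NUM_CLASSES = 7  # Angry, Disgust, Fear, Happy, Neutral, Sad, Surprise


def build_cnn(input_shape=(48, 48, 1), num_classes=NUM_CLASSES):
    """Hypothetical example architecture; sizes are illustrative, not the article's exact model."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),  # 48x48 grayscale face crop
        tf.keras.layers.Conv2D(32, 3, padding="same", activation="relu"),
        tf.keras.layers.MaxPooling2D(),     # 48 -> 24
        tf.keras.layers.Conv2D(64, 3, padding="same", activation="relu"),
        tf.keras.layers.MaxPooling2D(),     # 24 -> 12
        tf.keras.layers.Conv2D(128, 3, padding="same", activation="relu"),
        tf.keras.layers.MaxPooling2D(),     # 12 -> 6
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dropout(0.5),       # regularization against overfitting
        tf.keras.layers.Dense(num_classes, activation="softmax"),  # one probability per emotion
    ])
    model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])
    return model
```

Each 2x2 max-pooling step halves the spatial size (48 → 24 → 12 → 6), which keeps the parameter count modest for such small inputs. Training would then be a call such as `model.fit(train_data, validation_data=val_data, epochs=30)`, where `train_data` and `val_data` are the training and validation splits and 30 is an arbitrary example value.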

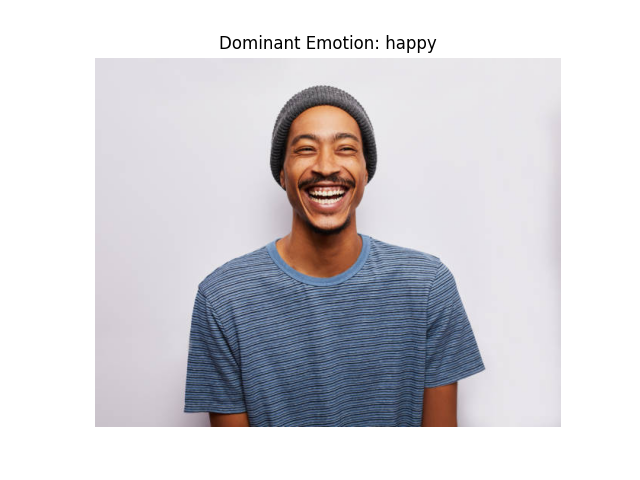

After that, we plot the training and validation accuracy and loss over the epochs, and finally we pass an image path to the trained model to check its prediction (Figure 1).
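The plotting and prediction steps might look roughly like the sketch below. Keras's `model.fit` returns a `History` object whose `history` dictionary holds one list per metric, with `val_`-prefixed entries for the validation split. `plot_history`, `predict_emotion`, and the `EMOTIONS` order are assumptions: the order matches Keras's alphabetical sorting of fer2013's folder names, but the class names recorded by your own data loader are the safer source. The image is assumed to be a roughly centered face crop, and the division by 255 assumes the model was trained on inputs scaled to [0, 1].

```python
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf

# Assumption: class indices follow the alphabetical order of the dataset's folder names.
EMOTIONS = ["angry", "disgust", "fear", "happy", "neutral", "sad", "surprise"]


def plot_history(history):
    """Plot training vs. validation accuracy and loss from the History returned by model.fit."""
    fig, axes = plt.subplots(1, 2, figsize=(10, 4))
    for ax, metric in zip(axes, ("accuracy", "loss")):
        ax.plot(history.history[metric], label="training")
        ax.plot(history.history["val_" + metric], label="validation")
        ax.set_xlabel("epoch")
        ax.set_ylabel(metric)
        ax.legend()
    fig.tight_layout()
    return fig


def predict_emotion(model, image_path, class_names=EMOTIONS):
    """Load one face image, preprocess it like the training data, and return (label, probability)."""
    raw = tf.io.read_file(image_path)
    img = tf.io.decode_image(raw, channels=1, expand_animations=False)  # (H, W, 1) uint8 grayscale
    img = tf.image.resize(img, (48, 48)) / 255.0                         # (48, 48, 1) float32 in [0, 1]
    probs = np.asarray(model.predict(img[tf.newaxis, ...], verbose=0))[0]  # add a batch dimension
    idx = int(np.argmax(probs))
    return class_names[idx], float(probs[idx])
```

Decoding with `tf.io` rather than an image library keeps the preprocessing identical in spirit to the training pipeline: one grayscale channel, 48x48 pixels, values in [0, 1].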

The second approach, using the DeepFace library, is much simpler: we pass in the image and get back the emotion the model is most confident about. More precisely, for each detected face it returns a dictionary with four keys:

- emotion: a dictionary with the confidence percentage for each emotion; the values sum to 100

- dominant_emotion: the emotion with the highest confidence percentage

- region: the coordinates of the detected face

- face_confidence: the confidence that an actual face was found in the image
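A hedged sketch of the DeepFace call and of reading those four keys follows. `DeepFace.analyze` with `actions=["emotion"]` is DeepFace's analysis entry point; depending on the installed version it returns either a single dictionary or a list with one dictionary per detected face, so the helper normalizes both. `analyze_emotion` and `summarize` are hypothetical helpers, and the top-3 ranking is only for illustration.

```python
def analyze_emotion(img_path):
    """Hypothetical helper: run DeepFace's emotion analysis and return the first face's result."""
    # Imported lazily: DeepFace is a heavy dependency and downloads model weights on first use.
    from deepface import DeepFace

    results = DeepFace.analyze(img_path=img_path, actions=["emotion"])
    # Newer versions return a list (one dict per face); older ones return a single dict.
    return results[0] if isinstance(results, list) else results


def summarize(face):
    """Hypothetical helper: pull the four documented keys out of one face's result dict."""
    ranked = sorted(face["emotion"].items(), key=lambda kv: kv[1], reverse=True)
    return {
        "dominant_emotion": face["dominant_emotion"],
        "top3": [(name, round(float(score), 2)) for name, score in ranked[:3]],
        "region": face["region"],                       # bounding box of the detected face
        "face_confidence": face.get("face_confidence"),  # may be absent in older versions
    }
```

For example, `summarize(analyze_emotion("photo.jpg"))` would return the dominant emotion, the three highest-scoring emotions, the face region, and the face confidence. By default `analyze` raises an error when it cannot detect a face; passing `enforce_detection=False` disables that check.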

Conclusion

This project explored two approaches to emotion detection in images: training a CNN from scratch and using the pre-trained DeepFace model. Training a CNN provided valuable insights into model customization but required careful data preparation, while DeepFace offered a faster, ready-made solution with impressive accuracy. Both methods have their strengths: custom CNNs allow model tuning and adaptation, while pre-trained models like DeepFace simplify implementation. Overall, the choice between them depends on project needs, available resources, and how much control you want over the model's training and performance.